Can kids and parents – students and tutors – have fun learning and teaching together AND create something that will contribute to advancing the state-of-the-art of computer vision and artificial intelligence research?

In 2009, when Dr. Li and kindred computer vision and artificial intelligence (CV/AI) researchers looked to the Internet for real-world data to test their machine-learning programs for full-scene image recognition, user-tagged image collections on sites like Flickr were about as rich a learning resource as could be found. Dealing with "dirty" (irrelevant) tags in such collections is a non-trivial challenge for these CV researchers. It was also entirely reasonable for their study designs to assume that human effort, presumed to be expensive, would be scarce for both preparing materials and interactively tutoring and training machine-learning programs.

By 2015 the 'Seeing Eye Child' Robot Adoption Agency plans to have both:

- a semantically-rich Fact Cloud for a non-trivial subset of the British Library Image Collection, AND

- a game-energized, crowdsource-powered human-tutor resource, freely available for training CV/AI machine-learning programs

Through initial counsel and collaboration with active CV/AI researchers, we will refine and extend our game design and community dynamics to transition the 'Seeing Eye Child' Robot Adoption Agency into its mature and sustainable state.

At sustainable maturity, the Robot Adoption Agency gaming community will attract programming learner-players who will use the game's "sandbox" resource and community to develop and extend their CV/AI skills and interests. Some proportion of those programmer-players will develop a deep interest in human/computer interaction and contribute to the Robot Adoption Agency's gaming community by creating such components as new Open Source training/tutor workflow plug-ins. Those with interests driven more by game design and development will likely contribute presentation/interaction plug-ins to add fun and engaging robot character generators for our programmer-players' otherwise unseen running-in-memory agent programs. When we get to this level of community self-support, the game will be in its own good hands.

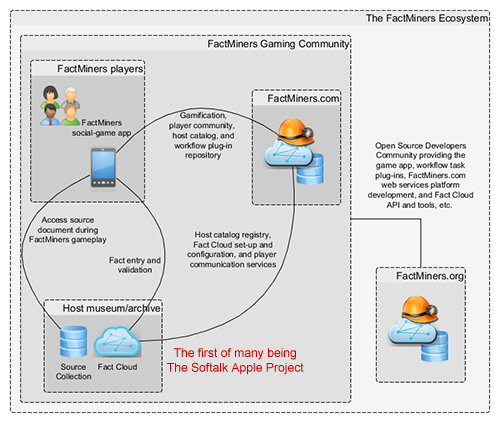

So, what's next? Will the FactMiners ecosystem ever be more than an interesting idea that remains untried? I, for one, don't intend to let that happen. Step by step, we're moving forward. In the "FactMiners: More or Less Folksonomy?" article, we reached out to museum informatics professionals and began collaborating with them, both to benefit from their domain expertise and to find kindred spirits interested in hosting FactMiners Fact Cloud companions for their online digital collections. In this article, we've described how the FactMiners ecosystem and its Fact Cloud architecture can accommodate image-based digital collections in addition to the print/text realm of complex magazine document structure that is our project focus at The Softalk Apple Project.

In exploring this new use case within digital image collections for the FactMiners ecosystem, we have identified how our game design can "play" into the domains of computer vision (CV) and artificial intelligence (AI). So, among our next steps toward bringing the FactMiners ecosystem to life will be finding kindred spirits in the CV/AI domain who are interested in exploring just how fun (and useful) it would be to have a British Library Image Collection Fact Cloud companion and 'Seeing Eye Child' robot-tutor web service.

I believe if we can bring the active interest of a CV/AI collaborator to the table as we discuss this idea further with the good folks at the British Library Labs, we'll be a BIG step closer to opening the Internet's first 'Seeing Eye Child' Robot Adoption Agency courtesy of the collective efforts of the FactMiners developer community, the British Library Labs, and some as-yet-unidentified CV/AI researchers. Stay tuned...
